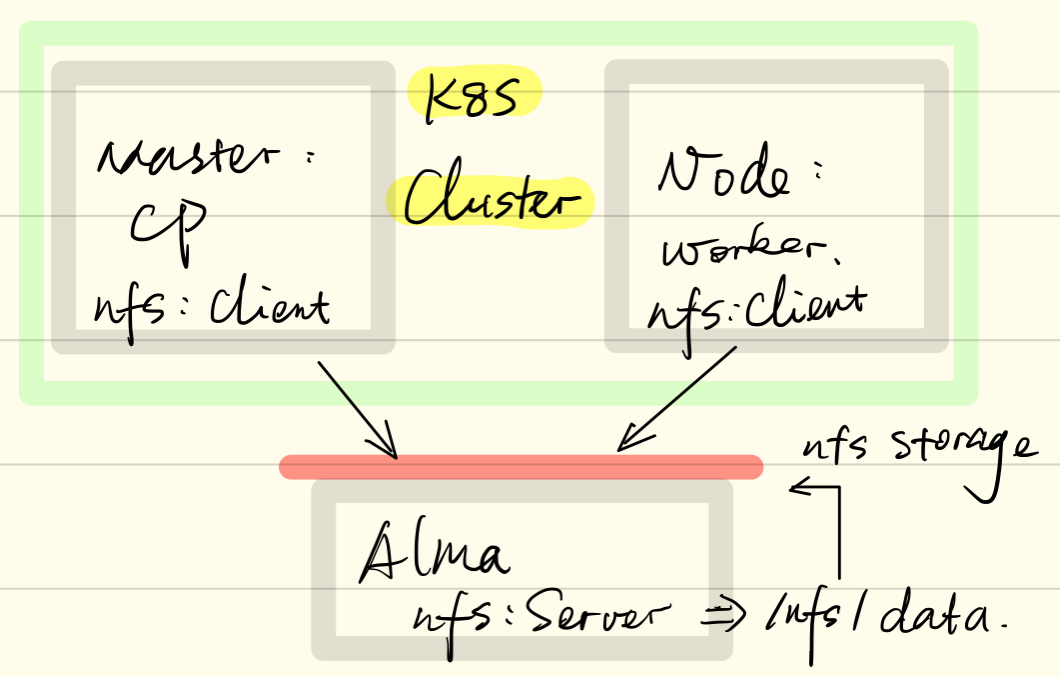

Environment

Alma: AlmaLinux 8.5

CP/Worker: Ubuntu 18.04

NFS installation

Alma:

#Install

yum install -y nfs-utils

#NFS server: export the shared directory

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

mkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --now

#Reload the export configuration

exportfs -r

Ubuntu:

#Install the client

zyi@worker:~$ sudo apt install nfs-common

#List the exports of the NFS server

zyi@worker:~$ showmount -e alma

Export list for alma:

/nfs/data *

Test steps

Native volume

The contents of an nfs volume are preserved when a Pod is deleted; the volume is only unmounted.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-native-storage
  name: nginx-native-storage
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-native-storage
  template:
    metadata:
      labels:
        app: nginx-native-storage
    spec:
      containers:
      - image: networktool:v1.1
        name: nginx-web
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
      volumes: #mount NFS directly through the nfs field under volumes
      - name: html
        nfs:
          server: alma
          path: /nfs/data/nginx-pv #this directory must already exist on the NFS server, otherwise the mount fails and the Pod cannot start

[root@Alma ~]# echo 'POD for Native-Storage' > /nfs/data/nginx-pv/index.html

zyi@cp:~$ kubectl describe po nginx-native-storage-99bd9bd8c-r6r4j

Name: nginx-native-storage-99bd9bd8c-r6r4j

Namespace: default

Priority: 0

Node: worker/172.16.21.111

Start Time: Mon, 21 Feb 2022 02:58:44 +0000

Labels: app=nginx-native-storage

pod-template-hash=99bd9bd8c

Annotations: cni.projectcalico.org/containerID: a3211ca74ede2738b4fc6d37456d17e68b53120743f1dc27bb1e7e6aad2dc98e

cni.projectcalico.org/podIP: 192.168.171.113/32

cni.projectcalico.org/podIPs: 192.168.171.113/32

Status: Running

IP: 192.168.171.113

IPs:

IP: 192.168.171.113

Controlled By: ReplicaSet/nginx-native-storage-99bd9bd8c

Containers:

nginx-web:

Container ID: docker://1d6b3194ea115bcc9cd91d36cf4067192a13486c82b9cea6a1f39f43b9774501

Image: networktool:v1.1

Image ID: docker://sha256:293c239dd855827d9037ee498ad57b0e09147fe3324b39e8a48f7d5547d1e7e9

Port: <none>

Host Port: <none>

State: Running

Started: Mon, 21 Feb 2022 02:58:49 +0000

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/usr/share/nginx/html from html (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-2mf8w (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

**html:

Type: NFS (an NFS mount that lasts the lifetime of a pod)

Server: alma

Path: /nfs/data/nginx-pv

ReadOnly: false**

kube-api-access-2mf8w:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events: <none>

zyi@cp:~$ curl 192.168.171.113

POD for Native-Storage

Static PV

- Create the PV pool

#Alma

[root@Alma ~]# mkdir /nfs/data/pv01

[root@Alma ~]# mkdir /nfs/data/pv02

[root@Alma ~]# mkdir /nfs/data/pv03

- Create the PVs and give them a storage class name

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01-100m
spec:
  capacity:
    storage: 100M
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv01
    server: alma
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02-1gi
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv02
    server: alma
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03-3gi
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv03
    server: alma

Storage class name: nfs

Static PVs:

- pv01-100m -> alma:/nfs/data/pv01;

- pv02-1gi -> alma:/nfs/data/pv02;

- pv03-3gi -> alma:/nfs/data/pv03

- Create the Pods and bind a PV through a PVC

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-pvc
  name: nginx-deploy-pvc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-pvc
  template:
    metadata:
      labels:
        app: nginx-deploy-pvc
    spec:
      containers:
      - image: networktool:v1.1
        name: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
      volumes:
      - name: html
        persistentVolumeClaim:
          claimName: nginx-pvc
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs

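- The manifest above creates the PVC and mounts it in the Pods;

- the PVC references the storage class name set on the PVs (nfs);

- the PVC requests 200Mi, so pv02 is selected: pv01 (100M) is too small, and pv02 (1Gi) is the smallest volume that satisfies the request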
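The selection described in the list above can be sketched in a few lines of Python. This is only a simplified model, not the actual Kubernetes binder: it assumes that a claim binds to the smallest PV whose storage class and access mode match and whose capacity is at least the request. The helper names `to_bytes` and `pick_pv` are made up for this illustration.

```python
# Simplified model of static PV selection (illustrative names, not a Kubernetes API).
UNITS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,   # binary suffixes
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,      # decimal suffixes
}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '200Mi' or '100M' to a number of bytes."""
    # Try the two-letter binary suffixes before the one-letter decimal ones.
    for suffix in sorted(UNITS, key=len, reverse=True):
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * UNITS[suffix]
    return int(quantity)  # no suffix: plain bytes


def pick_pv(pvs, request, storage_class, access_mode):
    """Return the smallest matching PV that can hold the request, or None."""
    candidates = [
        pv for pv in pvs
        if pv["class"] == storage_class
        and access_mode in pv["modes"]
        and to_bytes(pv["capacity"]) >= to_bytes(request)
    ]
    return min(candidates, key=lambda pv: to_bytes(pv["capacity"]), default=None)


# The three static PVs from this walkthrough.
pvs = [
    {"name": "pv01-100m", "capacity": "100M", "class": "nfs", "modes": ["ReadWriteMany"]},
    {"name": "pv02-1gi", "capacity": "1Gi", "class": "nfs", "modes": ["ReadWriteMany"]},
    {"name": "pv03-3gi", "capacity": "3Gi", "class": "nfs", "modes": ["ReadWriteMany"]},
]

chosen = pick_pv(pvs, "200Mi", "nfs", "ReadWriteMany")
print(chosen["name"])  # pv02-1gi
```

Note that 100M is decimal (100,000,000 bytes) while 200Mi is binary (209,715,200 bytes), which is why pv01 is ruled out.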

zyi@cp:~$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01-100m 100M RWX Retain Available nfs 16h

pv02-1gi 1Gi RWX Retain Bound default/nginx-pvc nfs 45m

pv03-3gi 3Gi RWX Retain Available nfs 16h

zyi@cp:~$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nginx-pvc Bound pv02-1gi 1Gi RWX nfs 31s

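The reclaim policy of these PVs is Retain: deleting the Pods or the PVC does not delete the PV. Removing the PV requires manual intervention.

Retain reclaim policy

Retain allows the resource to be reclaimed manually. When the PersistentVolumeClaim is deleted, the PersistentVolume still exists and the volume is considered "released". Because the previous claimant's data is still on the volume, it cannot yet be used by another claim.

An administrator can reclaim the volume manually with the following steps:

- Delete the PersistentVolume object. The associated storage asset in the external infrastructure (for example an AWS EBS, GCE PD, Azure Disk, or Cinder volume) still exists after the PV is deleted.

- Manually clean up the data on the associated storage asset as needed.

- Manually delete the associated storage asset.

To reuse the same storage asset, create a new PersistentVolume object based on the definition of that asset.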
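As a rough illustration of the difference between the two reclaim policies seen in this walkthrough, the sketch below models what happens to a PV when its claim is deleted. It is a toy model with made-up names (`PV`, `release`, `bindable`), not Kubernetes code.

```python
from dataclasses import dataclass


@dataclass
class PV:
    name: str
    reclaim_policy: str  # "Retain" or "Delete"
    phase: str = "Bound"


def release(pv: PV):
    """Return the PV as it looks after its claim is deleted, or None if it is removed."""
    if pv.reclaim_policy == "Retain":
        # The PV object and its data stay, but the volume is only "Released".
        return PV(pv.name, pv.reclaim_policy, phase="Released")
    if pv.reclaim_policy == "Delete":
        # The PV object is removed together with the claim.
        return None
    raise ValueError(f"unsupported reclaim policy: {pv.reclaim_policy}")


def bindable(pv) -> bool:
    """In this model only an Available PV can be bound by a new claim."""
    return pv is not None and pv.phase == "Available"


static_pv = release(PV("pv02-1gi", "Retain"))
print(static_pv.phase, bindable(static_pv))  # Released False

dynamic_pv = release(PV("pvc-3447bc4d-7029-497b-871a-7800a95fe823", "Delete"))
print(dynamic_pv)  # None
```

The Released state is the reason for the manual steps: the retained PV has to be deleted and recreated before a new claim can use it.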

Dynamic PV

- Create the StorageClass

#Set up the ServiceAccount and RBAC

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default #set the namespace for your environment; same below
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
---
#Configure the StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: alma-nfs-sc
provisioner: alma-nfs #must match the PROVISIONER_NAME environment variable of the provisioner
parameters:
  archiveOnDelete: "false"
---
#Configure the provisioner
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default #same namespace as in the RBAC objects
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: alma-nfs #provisioner name; must match the provisioner field of the StorageClass
            - name: NFS_SERVER
              value: alma #NFS server address (hostname or IP)
            - name: NFS_PATH
              value: /nfs/data/sc #exported NFS directory used for provisioning; it must already exist on the server
      volumes:
        - name: nfs-client-root
          nfs:
            server: alma #NFS server address (hostname or IP)
            path: /nfs/data/sc #same exported NFS directory
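The one thing that must line up in the manifests above is the provisioner name: the `provisioner` field of the StorageClass has to equal the `PROVISIONER_NAME` environment variable of the Deployment. A minimal Python sketch of that check follows. It assumes the manifests have already been loaded into plain dictionaries (for example with a YAML parser); the function name `provisioner_name_matches` is made up for this illustration.

```python
def provisioner_name_matches(storage_class: dict, deployment: dict) -> bool:
    """Check that the StorageClass provisioner equals PROVISIONER_NAME in the Deployment."""
    expected = storage_class["provisioner"]
    containers = deployment["spec"]["template"]["spec"]["containers"]
    for container in containers:
        for env in container.get("env", []):
            if env["name"] == "PROVISIONER_NAME":
                return env["value"] == expected
    return False  # the variable is not set at all


# Trimmed-down versions of the two manifests from this walkthrough.
storage_class = {"metadata": {"name": "alma-nfs-sc"}, "provisioner": "alma-nfs"}
deployment = {
    "spec": {"template": {"spec": {"containers": [
        {
            "name": "nfs-client-provisioner",
            "env": [
                {"name": "PROVISIONER_NAME", "value": "alma-nfs"},
                {"name": "NFS_SERVER", "value": "alma"},
                {"name": "NFS_PATH", "value": "/nfs/data/sc"},
            ],
        }
    ]}}}
}

print(provisioner_name_matches(storage_class, deployment))  # True
```

If the two names differ, the provisioner has no reason to act on claims of that class, and such claims would be expected to stay Pending.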

zyi@cp:~$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

alma-nfs-sc alma-nfs Delete Immediate false 4s

- Make it the default StorageClass

zyi@cp:~$ kubectl patch storageclass alma-nfs-sc -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/alma-nfs-sc patched

zyi@cp:~$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

**alma-nfs-sc (default)** alma-nfs Delete Immediate false 7m6s

zyi@cp:~$ kubectl describe sc alma-nfs-sc

Name: alma-nfs-sc

IsDefaultClass: Yes

Annotations: kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"alma-nfs-sc"},"parameters":{"archiveOnDelete":"false"},"provisioner":"alma-nfs"}

,**storageclass.kubernetes.io/is-default-class=true**

Provisioner: alma-nfs

Parameters: archiveOnDelete=false

AllowVolumeExpansion: <unset>

MountOptions: <none>

ReclaimPolicy: Delete

VolumeBindingMode: Immediate

Events: <none>

zyi@cp:~$

- Test the StorageClass

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "alma-nfs-sc" #must match metadata.name of the StorageClass (older annotation form; spec.storageClassName is the current equivalent)
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi

zyi@cp:~$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nginx-pvc Bound pv02-1gi 1Gi RWX nfs 138m

test-claim Pending alma-nfs-sc 16m

Check the logs of the provisioner Pod:

zyi@cp:~$ kubectl logs nfs-client-provisioner-c9f8dbb58-pdngj

I0221 05:31:26.150158 1 leaderelection.go:185] attempting to acquire leader lease default/alma-nfs...

E0221 05:31:43.632142 1 event.go:259] Could not construct reference to: '&v1.Endpoints{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"alma-nfs", GenerateName:"", Namespace:"default", SelfLink:"", UID:"62fda3da-1914-4287-949f-fbe9a81746e2", ResourceVersion:"1188463", Generation:0, CreationTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:63781017992, loc:(*time.Location)(0x1956800)}}, DeletionTimestamp:(*v1.Time)(nil), DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string{"control-plane.alpha.kubernetes.io/leader":"{\"holderIdentity\":\"nfs-client-provisioner-c9f8dbb58-pdngj_8361e4a2-92d7-11ec-9b02-4277e0456c6e\",\"leaseDurationSeconds\":15,\"acquireTime\":\"2022-02-21T05:31:43Z\",\"renewTime\":\"2022-02-21T05:31:43Z\",\"leaderTransitions\":1}"}, OwnerReferences:[]v1.OwnerReference(nil), Initializers:(*v1.Initializers)(nil), Finalizers:[]string(nil), ClusterName:""}, Subsets:[]v1.EndpointSubset(nil)}' due to: 'selfLink was empty, can't make reference'. Will not report event: 'Normal' 'LeaderElection' 'nfs-client-provisioner-c9f8dbb58-pdngj_8361e4a2-92d7-11ec-9b02-4277e0456c6e became leader'

I0221 05:31:43.632308 1 leaderelection.go:194] successfully acquired lease default/alma-nfs

I0221 05:31:43.633958 1 controller.go:631] Starting provisioner controller alma-nfs_nfs-client-provisioner-c9f8dbb58-pdngj_8361e4a2-92d7-11ec-9b02-4277e0456c6e!

I0221 05:31:43.734591 1 controller.go:680] Started provisioner controller alma-nfs_nfs-client-provisioner-c9f8dbb58-pdngj_8361e4a2-92d7-11ec-9b02-4277e0456c6e!

I0221 06:03:32.316844 1 controller.go:987] provision "default/nginx-sc" class "alma-nfs-sc": started

E0221 06:03:32.358963 1 controller.go:1004] provision "default/nginx-sc" class "alma-nfs-sc": unexpected error getting claim reference: selfLink was empty, can't make reference

I0221 06:16:43.646567 1 controller.go:987] provision "default/nginx-sc" class "alma-nfs-sc": started

E0221 06:16:43.654139 1 controller.go:1004] provision "default/nginx-sc" class "alma-nfs-sc": unexpected error getting claim reference: selfLink was empty, can't make reference

I0221 06:18:35.976603 1 controller.go:987] provision "default/test-claim" class "alma-nfs-sc": started

E0221 06:18:35.998650 1 controller.go:1004] provision "default/test-claim" class "alma-nfs-sc": unexpected error getting claim reference: selfLink was empty, can't make reference

I0221 06:31:43.647355 1 controller.go:987] provision "default/nginx-sc" class "alma-nfs-sc": started

I0221 06:31:43.647862 1 controller.go:987] provision "default/test-claim" class "alma-nfs-sc": started

E0221 06:31:43.656246 1 controller.go:1004] provision "default/nginx-sc" class "alma-nfs-sc": unexpected error getting claim reference: selfLink was empty, can't make reference

E0221 06:31:43.661489 1 controller.go:1004] provision "default/test-claim" class "alma-nfs-sc": unexpected error getting claim reference: selfLink was empty, can't make reference

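After some searching, the cause turns out to be that Kubernetes 1.20 no longer populates selfLink (the RemoveSelfLink feature gate is enabled by default from that release), which this provisioner image still relies on.

References: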

https://stackoverflow.com/questions/65376314/kubernetes-nfs-provider-selflink-was-empty

https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/issues/25

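The current workaround is to edit /etc/kubernetes/manifests/kube-apiserver.yaml and turn the feature gate off. This only works on releases that still allow the gate to be disabled: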

#Under here:
spec:
  containers:
  - command:
    - kube-apiserver
#Add this line:
    - --feature-gates=RemoveSelfLink=false

zyi@cp:~$ sudo systemctl restart kubelet.service

zyi@cp:~$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nginx-pvc Bound pv02-1gi 1Gi RWX nfs 143m

test-claim Bound pvc-3fc64d8f-5821-4e4e-a4e4-193cc4ef7878 1Mi RWX alma-nfs-sc 21m

- Reference the StorageClass from a Deployment/Pod

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-sc
  name: nginx-deploy-sc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-sc
  template:
    metadata:
      labels:
        app: nginx-deploy-sc
    spec:
      containers:
      - image: networktool:v1.1
        name: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
      volumes:
      - name: html
        persistentVolumeClaim:
          claimName: nginx-sc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-sc
  labels:
    app: nginx-sc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: alma-nfs-sc #the storage class created in this environment
  resources:
    requests:
      storage: 2Gi

zyi@cp:~$ kubectl apply -f deployment-sc.yaml

deployment.apps/nginx-deploy-sc created

persistentvolumeclaim/nginx-sc created

zyi@cp:~$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01-100m 100M RWX Retain Available nfs 19h

pv02-1gi 1Gi RWX Retain Bound default/nginx-pvc nfs 3h21m

pv03-3gi 3Gi RWX Retain Available nfs 19h

**pvc-3447bc4d-7029-497b-871a-7800a95fe823 2Gi RWX Delete Bound default/nginx-sc alma-nfs-sc 11s**

zyi@cp:~$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nginx-pvc Bound pv02-1gi 1Gi RWX nfs 156m

**nginx-sc Bound pvc-3447bc4d-7029-497b-871a-7800a95fe823 2Gi RWX alma-nfs-sc 13s**

zyi@cp:~$ kubectl get po -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

back-app-6b5f87d447-d4dgj 1/1 Running 0 3d19h 192.168.171.109 worker <none> <none>

back-app-6b5f87d447-v8tqz 1/1 Running 0 3d19h 192.168.171.108 worker <none> <none>

front-app-544b8cdb7f-7hnf7 1/1 Running 0 3d21h 192.168.171.103 worker <none> <none>

front-app-544b8cdb7f-dmdvz 1/1 Running 0 3d21h 192.168.171.104 worker <none> <none>

front-app-544b8cdb7f-kf9x9 1/1 Running 0 3d21h 192.168.171.102 worker <none> <none>

nfs-client-provisioner-c9f8dbb58-pdngj 1/1 Running 3 82m 192.168.171.117 worker <none> <none>

nginx-deploy-pvc-756dcd469-gsjg2 1/1 Running 0 156m 192.168.171.115 worker <none> <none>

nginx-deploy-pvc-756dcd469-h2c74 1/1 Running 0 156m 192.168.242.82 cp <none> <none>

**nginx-deploy-sc-798f6f5cd5-8225r 1/1 Running 0 29s 192.168.171.119 worker <none> <none>

nginx-deploy-sc-798f6f5cd5-bk44c 1/1 Running 0 29s 192.168.242.84 cp <none> <none>**

nginx-native-storage-99bd9bd8c-lvdkl 1/1 Running 0 3h54m 192.168.171.112 worker <none> <none>

nginx-native-storage-99bd9bd8c-r6r4j 1/1 Running 0 3h54m 192.168.171.113 worker <none> <none>

A new directory created for the claim now appears under the NFS export:

[root@Alma ~]# ls /nfs/data/sc/ -l

total 4

drwxrwxrwx. 2 root root 6 Feb 21 14:52 default-nginx-sc-pvc-3447bc4d-7029-497b-871a-7800a95fe823

[root@Alma ~]# ll /nfs/data/sc/default-nginx-sc-pvc-3447bc4d-7029-497b-871a-7800a95fe823/

total 0

[root@Alma ~]# echo 'POD for StorageClass alma-nfs-sc!' > /nfs/data/sc/default-nginx-sc-pvc-3447bc4d-7029-497b-871a-7800a95fe823/index.html

Once index.html has been written, both Pods serve it:

zyi@cp:~$ curl 192.168.242.84

POD for StorageClass alma-nfs-sc!

zyi@cp:~$ curl 192.168.171.119

POD for StorageClass alma-nfs-sc!
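Judging from the listing above, the directory created for a claim appears to be named namespace-pvcName-pvName and placed under the provisioner's NFS_PATH. A small Python sketch of that naming follows. The pattern is inferred from this output only, and `provisioned_dir` is a made-up helper name.

```python
import posixpath


def provisioned_dir(nfs_path: str, namespace: str, pvc_name: str, pv_name: str) -> str:
    """Build the expected server-side directory for a dynamically provisioned claim."""
    return posixpath.join(nfs_path, f"{namespace}-{pvc_name}-{pv_name}")


path = provisioned_dir(
    "/nfs/data/sc",                                   # NFS_PATH of the provisioner
    "default",                                        # namespace of the PVC
    "nginx-sc",                                       # PVC name
    "pvc-3447bc4d-7029-497b-871a-7800a95fe823",       # generated PV name
)
print(path)
# /nfs/data/sc/default-nginx-sc-pvc-3447bc4d-7029-497b-871a-7800a95fe823
```

Knowing the expected path makes it easier to find the right directory on the NFS server when several claims share the same export.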